More E.T., More Marty McFly

What ’80s Movies Taught Me About AI, Humanity, and the Future of Education

It’s movie blockbuster season—and there’s nothing quite like watching Tom Cruise sprint for his life to remind us that summer has arrived.

Popcorn in hand, eyes wide, we soak up stories that push the boundaries of what’s possible. But for me, two films have always stood apart—not for their explosions or stunts, but for what they taught me about empathy, courage, and imagination.

(And yes, I can already feel my wife rolling her eyes as I use ’80s movies to make my point… but hey, it’s Father’s Day weekend, so I’m going with it.)

It was the summer of 1985. I was five years old, sitting in a packed theatre with my mom, watching E.T. during its re-release. I didn’t fully understand the dialogue, but one thing struck me hard: why were the adults being so mean to him? Why were they dissecting, isolating, probing? E.T. wasn’t a threat; he was vulnerable, curious, trying to connect. E.T. only wanted to go home!

We walked home from the downtown theatre and I was inconsolable. My grandfather was furious with my mom for bringing me to such a movie—though that’s a story for another time. Still makes me smile today.

That film lit something in me. A lifelong lens for equity. A gut-level belief that inclusion isn’t policy—it’s presence. And that some of the most powerful things in life can’t be measured, coded, or contained.

It’s about heart—our humanity. What defines us isn’t efficiency, logic, or optimization. It’s compassion, courage, and the willingness to stand beside those who are different or misunderstood.

That moment became the seed of my commitment to civil rights, to teaching history honestly, to exploring world issues with students, and to stepping into movements that demand more from us than silence.

A few summers later, Back to the Future showed me something else: that the future isn’t fixed—it’s something we imagine, shape, and take responsibility for. Marty McFly didn’t have it all figured out, but he had guts, creativity, and a sense of what mattered.

If E.T. taught me to care, Back to the Future taught me to act.

That intersection—where empathy meets design, and where our humanity drives our choices—is where education must stand today.

Because in the age of AI, what will define us isn’t how well we use the tools—but how deeply we hold on to what makes us human.

What Are We Actually Teaching?

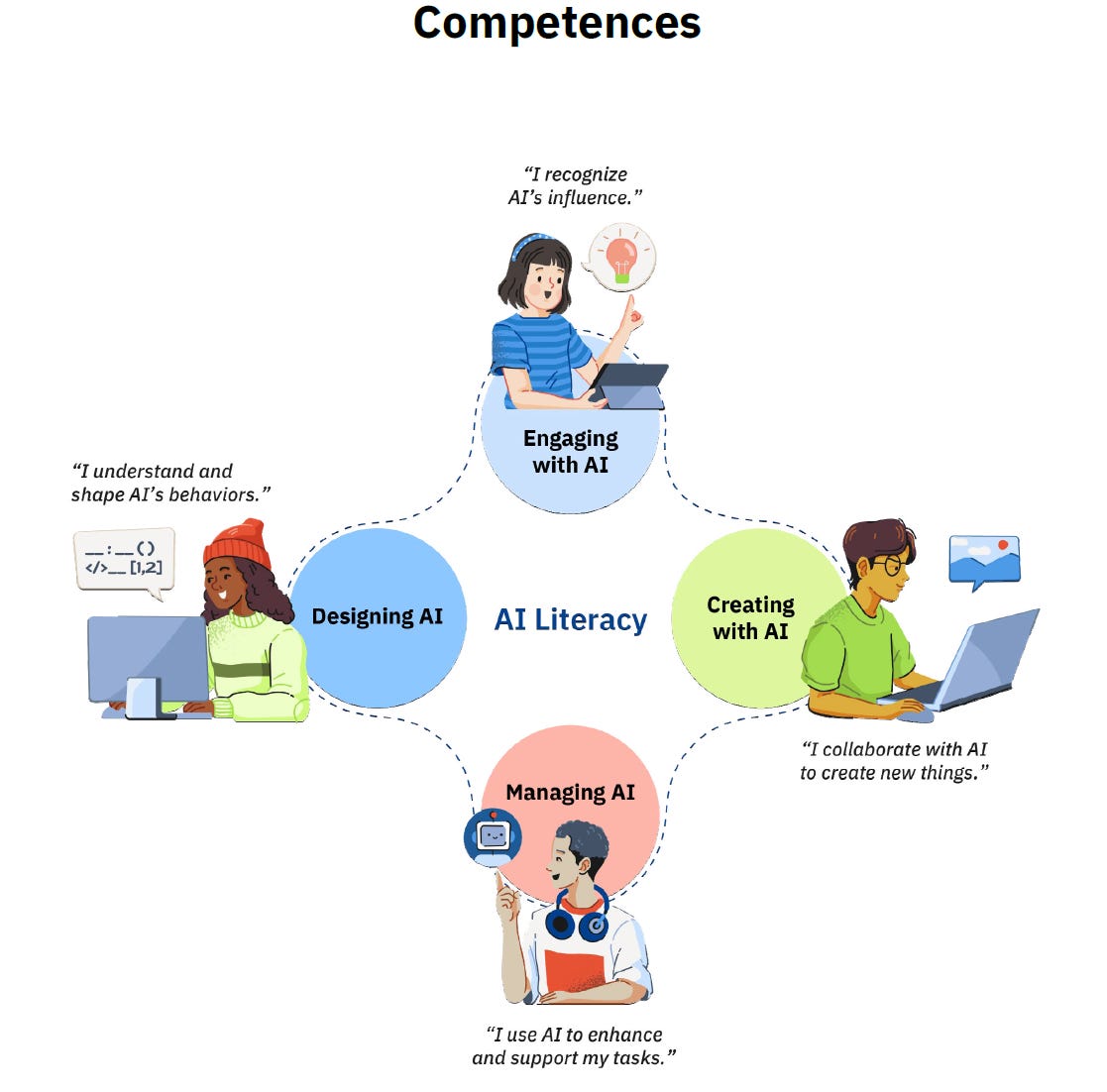

The newly released AI Literacy Framework—developed by the OECD, European Commission, and TeachAI (Empowering Learners for the Age of AI, 2025)—is a strong, globally-informed document. It outlines four core competencies that define what AI fluency should look like across primary and secondary schools:

Engaging with AI – understanding and evaluating it.

Creating with AI – using it to generate and refine ideas.

Managing AI – delegating tasks, setting goals, and aligning outputs with human values.

Designing AI – shaping how AI functions and interacts with the world.

These are practical and necessary and, as I have said before, provide a solid foundation for teaching with and about AI in a meaningful way.

But as I sat with the framework—and reflected on my own early lessons from E.T. and Back to the Future—I kept coming back to one question:

Are we also teaching students who they are in relationship to these tools?

What’s missing isn’t a fifth competency in the technical sense—but a foundational layer that must sit beneath them all: a way of helping students navigate AI with clarity, integrity, and humanity.

Navigating AI with Humanity

If Back to the Future was about what’s possible, then this moment in education asks us to go one step further: to teach students how to navigate uncertainty with integrity.

Not as a bonus skill. Not as a soft add-on. But as the core of how we raise young people to live alongside AI—and still lead human lives.

Think of Marty McFly, stranded in 1955, making a skateboard out of a kid’s wooden scooter, dodging bullies, rewriting timelines, and doing it all while staying true to who he was. That’s the kind of agility our students will need. Heck, that’s the kind of agility we will all need. We won’t just need to know how to use AI—we will need to know who we are in relationship to it, and how to move when the ground shifts beneath us.

We need to prepare for:

Holding space for grief, joy, and complexity—experiences AI will never truly interpret.

Listening to silence, nuance, and body language—the kind of intelligence E.T. evoked in us.

Noticing injustice and responding with conscience, even when the algorithm says “optimize.”

Slowing down to reflect, not just react.

Asking not just what can be automated, but what must remain human.

This isn’t about resisting AI. It’s about grounding ourselves in ethical imagination, so that we don’t just use technology—but shape it with intention.

Because in a world of prediction and pattern, it’s our children’s presence, their moral clarity, and their ability to navigate that will define the future.

Beyond Fluency: Toward Wisdom

The current framework offers a strong scaffolding—but scaffolding isn’t the cathedral.

To truly prepare learners for the age of AI, we need to move from AI fluency—understanding how it works and how to use it—toward AI wisdom: knowing when to pause, question, or say no.

As Victor Lee (Stanford) reminds us, large language models can be powerful creativity amplifiers—like thought partners in a design studio—but they’re not the source of insight. That still begins with us. (OECD, 2025, p. 30)

This deeper layer is also where students begin to reclaim agency in an AI-saturated world:

When a student challenges a gender-biased chatbot response, they’re not just thinking critically—they’re practicing moral courage.

When a teenager uses AI to brainstorm ideas for a short film, then chooses to scrap the output and film something raw and personal instead, they’re not just creating—they’re honoring authenticity.

When a child asks why her app keeps recommending beauty filters, she’s doing the emotional labour of resisting quiet manipulation.

This is the kind of wisdom we can’t afford to leave out: the human judgment, clarity, and ethical grounding that technology alone will never provide.

More E.T., More Marty

It begins with us—the adults.

We cannot act like the cold scientists in E.T., obsessed with classification, control, and outcomes. We need to act like Elliott: brave enough to love what we don’t understand, to protect what can’t defend itself, and to believe that connection matters as much as performance.

We must model for our children that not everything meaningful can be reduced to a rubric. That truth isn’t always in the data. That real learning is relational before it is computational.

And we need some of Marty McFly’s spirit too—not to escape the present, but to shape the future with what we already have: creativity, curiosity, and conviction. He didn’t wait for permission—he acted with heart, even when the path was unclear.

That’s our challenge now.

Let’s keep building fluency. But let’s not forget the deeper layer—the part no machine can replicate. The part that guides us when the path is uncertain: presence, discernment, imagination, and care.

Let’s not just teach students to coexist with AI.

Let’s teach them to lead—with humanity as their compass, and the courage to heal, not just build.

Because in the end, what our children need isn’t just smarter systems—it’s adults who, like Elliott, recognize the light in another being and dare to reach out.

Like E.T.’s finger—small, glowing, healing—it’s that human touch that shows us the way home.

This piece was edited with the help of ChatGPT, used as a writing and thinking partner for clarity—not as a substitute for voice.

"It’s about heart—our humanity. What defines us isn’t efficiency, logic, or optimization. It’s compassion, courage, and the willingness to stand beside those who are different or misunderstood."

Imagine building this into a school vision or mission.

Let AI do the organization and processing of information for you. Take that, mix it Socratically with your peers, come to a human-based level of knowledge, and then act in your community with what you know.

That is the AI literacy we need: how to use it for what is good, in action and in community. That is where it helps us find purpose and meaning.