The Myth of the Digital, and Now AI, Native

Why Intentional AI Integration in Education is Critical

Dr. Sabba Quidwai recently warned, “Assuming kids are fluent in AI because they’ve touched ChatGPT is like assuming I’m a mechanic because I’ve driven a car.” This metaphor captures the dangerous fallacy pervading modern education: that today's youth, raised on screens, inherently understand technology and artificial intelligence (AI).

In truth, most students are navigating a digital and AI-driven world without truly understanding its rules, risks, or possibilities. Without intentional scaffolding, we miss the opportunity to help them build the digital resilience, critical thinking, and ethical grounding needed to engage with it responsibly. I’ve been in schools around the world where students are scrolling TikTok, snapping to keep a streak on Snapchat, and writing entire emails in the subject line—all at once. Digital and AI fluency—understood as both literacy and an awareness of how the system works—is not a given. It’s a continuum, and the goal should be to move as many young people as possible closer to true fluency.

The label "digital native," once reassuring and now joined by “AI native,” is dangerously misleading.

Misplaced Assumptions, Deep Consequences

For nearly twenty years, we’ve told ourselves that today’s students “just get” technology. After all, they’ve grown up with smartphones in hand, seamlessly navigating apps, games, and social platforms. But this assumption has lulled us into complacency. What looks like fluency is often just familiarity—surface-level skills that mask deeper gaps in understanding.

Stanford’s 2025 AI Index Report brings this into sharp focus: while 81% of K–12 computer science teachers in the U.S. believe AI should be part of foundational education, fewer than half feel prepared to teach it. The message is clear—we can’t rely on exposure alone. Without deliberate efforts to build understanding, we risk leaving a generation underprepared for a world shaped by algorithms they use daily but don’t truly understand.

Students are beginning to feel that gap themselves. Many express uncertainty—even anxiety—about whether they’re actually ready for an AI-driven workplace. And higher education leaders are sounding the same alarm: if we don’t act now to bridge that divide, we’ll graduate students fluent in devices, but not in the systems that will define their futures.

The term “digital native” deceptively transfers responsibility for digital literacy onto students themselves, fostering complacency within educational institutions. This mirrors how we handled cellphone integration over the past 25 years—declaring it "everyone's responsibility" but effectively making it no one's priority. The results have been fragmented policies, inconsistent practices, and ultimately, unprepared graduates.

Even worse, the culture this hands-off approach has fostered in classrooms around the world has led to outright cellphone bans during school hours, as if learning to navigate technology can be paused between 8 a.m. and 3 p.m. Meanwhile, digital life continues uninterrupted through wearables, private chats, and algorithmic feeds the moment students step outside. Don’t get me wrong: schools everywhere have a real problem building a digital classroom culture grounded in intent and responsibility. But a ban isn’t just avoidance; it’s a missed teaching moment. And for students without access to structured guidance or digital/AI literacy at home, it deepens an already growing equity divide. By banning instead of teaching, we aren’t protecting students; we’re abandoning them.

Cellphone Integration and the Missed Opportunity

Dr. Michael Rich, Director of the Digital Wellness Lab at Boston Children's Hospital, emphasizes how our early-adopter approach to cellphone integration overlooked critical scaffolding around digital wellness. Rich highlights the rise of a pervasive phenomenon known as Fear of Being Left Out (FOBLO), fueled by social media algorithms that manipulate attention and amplify comparison. This constant digital pressure contributes to addiction, anxiety, and a fractured sense of self, as students increasingly live in two parallel realities: a physical world they inhabit and a curated virtual world that often bears little resemblance to the truth. Our reactive rather than proactive stance has amplified digital culture's pressures, making students believe everyone else's lives are perfect. It is a damaging misconception that, honestly, many of us model when we hunt for the perfect family picture to post or story to tell on our social media feeds.

To avoid repeating these mistakes, educational integration of AI must proactively incorporate scaffolded guardrails and teachings. This intentional approach requires embedding digital/AI fluency deeply into curricula: explicitly addressing how digital culture impacts mental health and perceptions, teaching citizenship, building the ability to tell truth from fiction, and much more.

Unscaffolded tech use has made students vulnerable to comparison culture, anxiety, and distraction. We need guardrails—not gadgets.

The Catastrophic Failure of Collective Responsibility

When responsibility is diffuse, it becomes nonexistent. Schools across jurisdictions have left digital fluency largely unstructured. Now we face the Age of AI. What are we going to do?

The assumption was that students would figure it out independently or through a few sporadic classes over the course of their schooling. However, Geoffrey Hinton, a Nobel Prize winner and deep learning pioneer often called the godfather of AI, warns, "Once these artificial intelligences get smarter than us, they will take control and they will make us irrelevant." This underscores the urgency of structured, proactive educational responses to AI's pervasive influence. As many have said, AI won’t replace people, but it will replace the people who don’t integrate it properly.

We are witnessing the consequences today: widespread AI anxiety across society, insufficient preparation for an AI-enabled workforce and for the collateral damage of AI's integration into it, and a pervasive vulnerability to misinformation that has been demonstrated in elections all over the world. Compounding the issue, teachers, school leaders, and system leaders themselves feel ill-equipped to integrate this rapidly evolving technology meaningfully, creating a cycle of apprehension and inadequate adoption.

Redefining Education with Intentionality

To pivot effectively, we must fundamentally rethink education's purpose. Public education jurisdictions must explicitly define this purpose, especially concerning digital, AI, and data fluency. Instead of superficial interactions, teachers, students, parents, and communities must learn to critically engage with, ethically question, and creatively use technology and AI, while aligning whatever approach is chosen with their values and their views on the purpose of education.

What students truly need isn't merely more human-delivered content, but strategic guidance in navigating a world where advanced machine intelligence increasingly intersects with human activity, much like Robert Downey Jr.'s character Iron Man in the Marvel movies and his interactions with Jarvis, his co-intelligent, AI-driven assistant. This isn't merely about improving standardized test outcomes but about equipping students for survival, agency, and meaning in a rapidly changing landscape, while understanding what it means to have this type of co-intelligence in your back pocket.

Real-World AI Use vs. Real Literacy: The HBR Lens

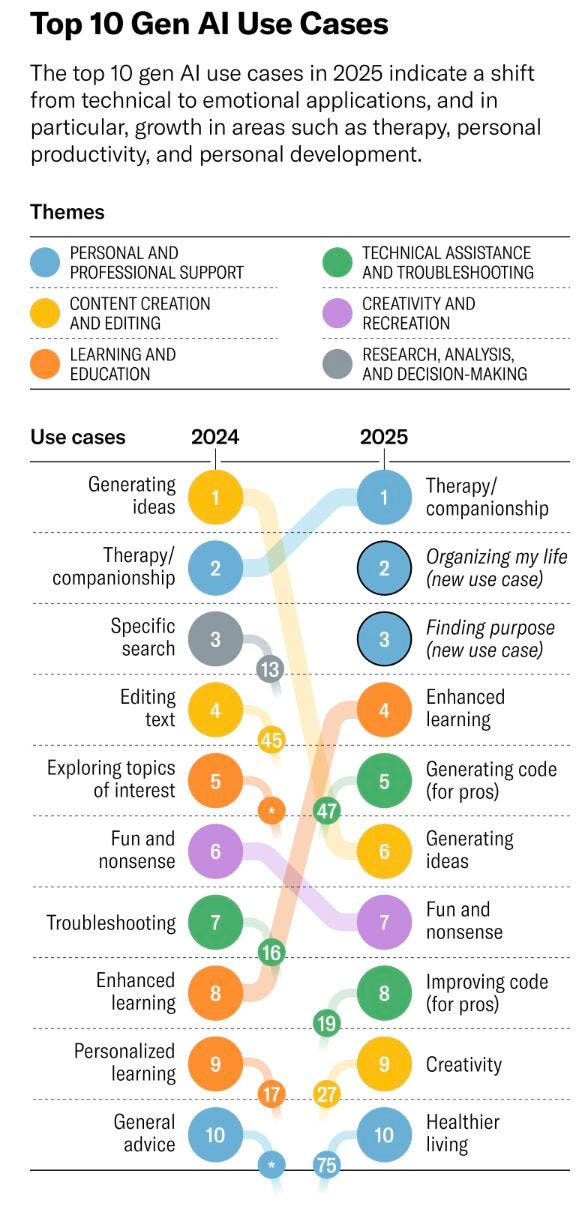

The recent Harvard Business Review article "How People Are Really Using Gen AI in 2025" echoes many of these concerns. It reveals that while GenAI tools are widely adopted, most users engage with them superficially—using AI to write emails, summarize documents, or brainstorm ideas. Few in society understand how these tools work or can critically assess their outputs.

The article includes compelling visuals showing a significant gap between usage and mastery. For example, professionals in marketing, education, and finance are using GenAI for task automation—but only a minority report feeling confident in evaluating the quality, bias, or ethics of the content produced. This directly maps onto K–12 environments, where students may be adept at prompting AI but remain ill-equipped to question its limitations.

These findings reinforce the myth-busting argument: exposure does not equal literacy. Without structured learning, today’s AI usage trends will widen inequities and deepen dependency rather than empower learners. They underscore the need to integrate AI intentionally into pedagogy and assessment so that it amplifies learning. We don’t want it to become a crutch, because that leads to cognitive atrophy.

Most GenAI usage today is surface-level. Literacy isn’t access—it’s agency.

Innovative Approaches at the Higher Levels

High schools and universities have been at the forefront of AI's disruptive impact on education. This disruption compels us to critically evaluate and redefine our educational frameworks to align with contemporary realities. This includes reexamining K–12 literacy continuums, pedagogical approaches, academic integrity, and assessment methodologies. Ethan Mollick, a professor at the Wharton School, advocates for a proactive and integrated approach to AI in education. He suggests that teachers should "integrate AI into everything you do," treating it as a collaborative partner in the learning process. Mollick emphasizes that AI should not replace teachers but rather augment their capabilities, allowing them to focus more on fostering critical thinking, ethical reasoning, and emotional intelligence among students.

This paradigm shift positions teachers as essential facilitators who guide students through the complexities of human-AI interactions. By embracing AI as a tool for personalized learning and adaptive instruction, teachers can create more engaging and effective learning environments. The goal is not to diminish the role of teachers but to empower them to cultivate wisdom, discernment, and resilience in their students, preparing them for a future where AI is an integral part of the societal fabric.

But this cannot simply be dumped on teachers. We must approach it from every part of our education systems and tackle it holistically, without silos and with full alignment.

From Anxiety to Agency: A Vision for Responsible Integration

As Dr. Sabba Quidwai succinctly states, we have a collective responsibility to transform student anxiety about AI into agency. I would argue that we have a responsibility in our educational system to do this with all stakeholders. Public education systems must commit to explicit training, clear policy frameworks, and opportunities for students to actively lead in the AI era. This approach fosters genuine digital and AI literacy, equipping students to critically engage with technology rather than passively consuming it.

Every jurisdiction should prioritize:

Establishing clear digital and AI education standards from early childhood through graduation and higher learning.

Providing comprehensive, continuous professional development for teachers and all stakeholders (support staff, school leaders, etc.).

Facilitating meaningful student engagement through project-based and inquiry-driven experiences while keeping the elements that have always worked and that are foundational to any student's learning.

Creating robust ethical frameworks guiding technology and AI use in schools.

Rejecting Myths, Embracing Intentionality

The myth of the digital native isn’t merely incorrect; it’s dangerously misleading, and we must not make the same mistake in this Age of AI. True digital and AI fluency demands intentional, structured education, preparing students not just for assessments, but for the complexities of the real world in a holistic way. This requires clear, committed leadership at every educational level, redefining public education as a proactive partner in the future, not a passive observer. We owe this to our students, our teachers, and ultimately, our collective societal future.